So you’ve created a new product landing page for your e-commerce business. You’ve waited a week since the landing page went live but have yet to experience any conversions. In the meantime, you’re searching for ways to make a more significant impact and attract customers.

A/B tests, also known as split tests, are a standard way businesses test UI/UX experiences, shopping cart conversions, new products, and more. Should you run an A/B test to see which variation of the page works better? You’ve thought about implementing different call-to-action copy or adding a video to the page, but you’re unsure which change will result in a better conversion rate.

This is where CRO (conversion rate optimization) in marketing comes in. You decide to A/B test a new CTA headline against the page’s current one before making additional changes. You want to base your decision on trustworthy data, but what happens when you run the test and the data is skewed, and you don’t know what to do next?

How to Make Sure Your E-commerce Optimization Is On Point

The reasons for CRO A/B testing can vary, but the results provide beneficial feedback on how to improve immediately, pivot direction, or search for other solutions to a problem on a website. Skewed data doesn’t always correlate with “failure,” though it may raise some red flags. But how do you know when you’re dealing with skewed data?

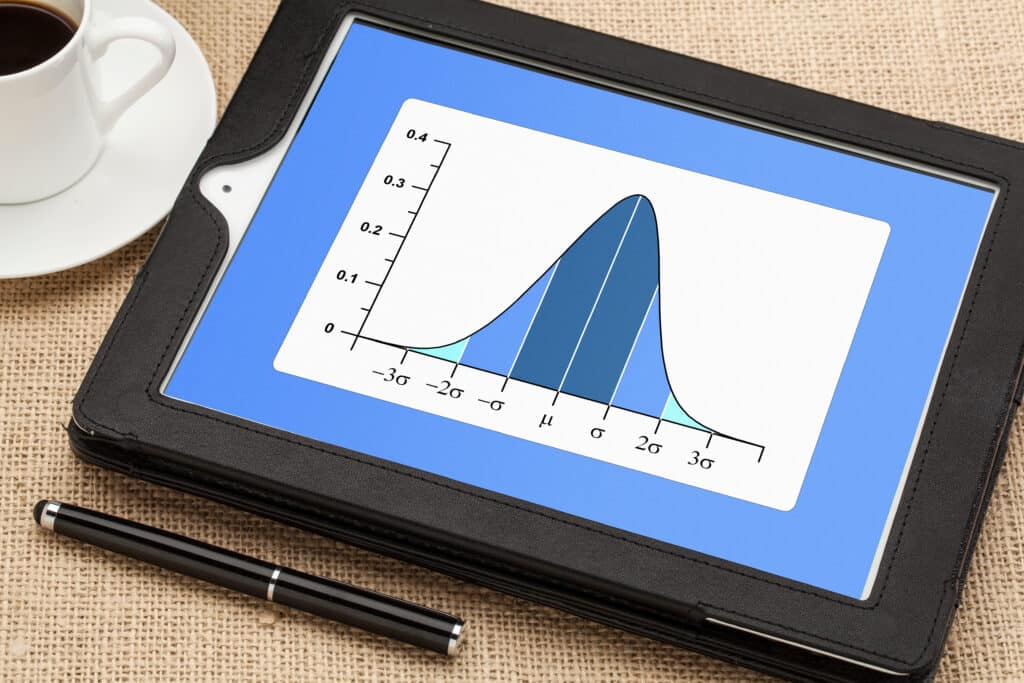

Skew is easy to spot on a distribution graph or report because skewness measures the asymmetry of the data set. In a symmetric bell curve, the two tails mirror each other. When the longer tail stretches to the right, the skew is positive; when it stretches to the left, the skew is negative. Investigating the root cause is the best course of action when you see skew, because CRO is all about mitigating risk and lessening the effects of any “less than desirable” results.
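The asymmetry described above can be put into a single number. The following is a minimal Python sketch of the standard moment-based skewness coefficient; the function name and the order values are made up for illustration and do not come from any real test.

```python
from statistics import fmean


def skewness(values):
    """Moment-based (population) skewness.

    Positive: the longer tail is on the right.
    Negative: the longer tail is on the left.
    """
    n = len(values)
    mean = fmean(values)
    m2 = sum((v - mean) ** 2 for v in values) / n  # second central moment
    m3 = sum((v - mean) ** 3 for v in values) / n  # third central moment
    if m2 == 0:
        return 0.0  # all values identical, so there is no asymmetry
    return m3 / m2 ** 1.5


# Hypothetical order values: most are small, a few are very large.
order_values = [20, 22, 25, 25, 27, 30, 31, 35, 120, 400]
print(f"skewness = {skewness(order_values):.2f}")
```

A result near zero suggests a roughly symmetric distribution, while a clearly positive or negative value is a cue to look for the cause.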

But you’re still stuck: What is causing the data to skew, and how can you fix it? We’ll discuss various causes for skewed data from CRO A/B testing, what they mean, and how they can still provide valuable insights.

What Factors Contribute to Skewed Results in CRO A/B Testing?

Before you run split tests, always dedicate time to planning them. Planning ahead helps you determine whether a test is statistically viable before you start it. However, it doesn’t guarantee you won’t see skewed results that require investigation. Several factors can contribute to skewed A/B test results, including:

- Lack of sample size

- Sample ratio mismatch

- Oversampling

- Not performing segmentation analysis

Lack of sample size

Lack of sample size means the amount of data your test has brought in so far isn’t enough to provide a statistically meaningful result.

How to fix it: Many A/B testing tools include a statistical significance calculation in their reporting. Alternatively, you can use a free A/B testing calculator to quickly check whether your experiment has enough data to be statistically significant, or a large enough sample size to provide actionable insights. If your test results aren’t statistically significant, you’ll have to decide whether to keep the test running or end it and move on. Be mindful of the parameters you planned up front, though; running a test for too short a timeframe directly contributes to a lack of sample size.
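To make the significance check concrete, here is a minimal Python sketch of a two-proportion z-test, one common way such calculators compare two conversion rates. It is an illustration under assumptions, not the method of any particular tool: the function name and all visitor and conversion counts are hypothetical.

```python
from math import erfc, sqrt


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 0.0, 1.0  # no conversions (or all conversions) in both variants
    z = (rate_b - rate_a) / std_err
    p_value = erfc(abs(z) / sqrt(2))  # two-sided tail of the normal distribution
    return z, p_value


# Hypothetical counts: the same 3.0% vs 4.5% conversion rates at two sample sizes.
for visitors in (1_000, 10_000):
    conv_a, conv_b = int(visitors * 0.03), int(visitors * 0.045)
    z, p = two_proportion_z_test(conv_a, visitors, conv_b, visitors)
    print(f"{visitors} visitors per variant: z = {z:.2f}, p = {p:.4f}")
```

The two runs use identical conversion rates, so any difference in the p-value comes from sample size alone, which is why ending a test early can leave a real difference undetected.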

Sample ratio mismatch

Sample ratio mismatch (SRM) happens when the variants in your test have unbalanced traffic and don’t fit the expected traffic ratio. For example: Say that your total sample for an A/B test is 300 people. If you split this number up 50/50, it means approximately 150 people should hit each variation (with a small degree of variance due to randomized variation assignment). When a sample ratio is mismatched, one variation may have 100 people while the other has 200.

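How to fix it: Some A/B testing tools have built-in SRM monitoring. If yours doesn’t, you can use an online SRM checker or run the calculation yourself, typically with a chi-square goodness-of-fit test that compares the observed traffic split to the expected one.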
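For a two-variant test, the manual SRM calculation can be sketched in a few lines of Python using a chi-square goodness-of-fit test. The function name and the 0.01 alert threshold are assumptions chosen for illustration; the visitor counts reuse the 300-person example above.

```python
from math import erfc, sqrt


def srm_check(visitors_a, visitors_b, expected_share_a=0.5, alpha=0.01):
    """Chi-square goodness-of-fit test for a two-variant traffic split.

    Returns (chi2, p_value, mismatch_flag). alpha is an assumed threshold.
    """
    total = visitors_a + visitors_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = ((visitors_a - expected_a) ** 2 / expected_a
            + (visitors_b - expected_b) ** 2 / expected_b)
    # Survival function of the chi-square distribution with 1 degree of freedom.
    p_value = erfc(sqrt(chi2 / 2))
    return chi2, p_value, p_value < alpha


# A 145/155 split is ordinary random variation; 100/200 is not.
for a, b in ((145, 155), (100, 200)):
    chi2, p, mismatch = srm_check(a, b)
    print(f"{a}/{b}: chi2 = {chi2:.2f}, p = {p:.4g}, SRM suspected = {mismatch}")
```

A flagged mismatch does not say which variant is wrong; it only signals that the traffic assignment or tracking should be investigated before the results are trusted.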

Oversampling

Oversampling happens when your test results include too many users who didn’t experience the change you’re testing. For example: If you ran a test on products with color swatches, your test data should only include users who actually visited pages for products that have color swatches. If you oversample, your test data may include anyone who visited any type of product page or, even worse, everyone who visited your site. The extra users dilute the effect of the change, which skews your test and produces inaccurate results.

How to fix it: Ensure your test is set up to only include users who will experience the change being tested. Having well-defined, specific targeting and audience conditions for your experiment will help mitigate oversampling.
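The dilution effect of oversampling can be shown with a small Python sketch based on the color swatch example. The `Visit` record and its fields are hypothetical; a real testing tool would apply this kind of filter through its targeting and audience conditions.

```python
from dataclasses import dataclass


@dataclass
class Visit:
    user_id: str
    variant: str
    saw_swatch_page: bool  # hypothetical flag: did the user reach a page with swatches?
    converted: bool


def exposed_only(visits):
    """Keep only users who could have experienced the change being tested."""
    return [v for v in visits if v.saw_swatch_page]


def conversion_rate(visits):
    return sum(v.converted for v in visits) / len(visits) if visits else 0.0


# Made-up data: half of the recorded users never saw a swatch page.
visits = [
    Visit("u1", "B", True, True),
    Visit("u2", "B", True, False),
    Visit("u3", "B", False, False),
    Visit("u4", "B", False, False),
]

print(f"all recorded users: {conversion_rate(visits):.0%}")
print(f"exposed users only: {conversion_rate(exposed_only(visits)):.0%}")
```

Filtering after the fact is shown here only to illustrate the problem; setting the audience conditions correctly before the test starts is the more reliable fix.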

Not performing segmentation analysis

Segmentation analysis is when you look at your test results by particular segments or divisions, such as reporting on mobile vs desktop traffic, or new vs returning users. Lack of segmentation analysis on CRO A/B testing results can lead to misinterpretations of the data.

How to fix it: Segmenting the results gives you context on how different users interact with your site. For example, compare a mobile user’s experience with a desktop user’s experience. The segmented results may show that a particular call-to-action helps mobile users but not desktop users. The overall results may look flat, but segmenting them gives you two actionable pieces of information about which e-commerce optimizations to implement for one audience (mobile) versus another (desktop).
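As a rough illustration of how a flat overall result can hide opposite effects, the Python sketch below groups conversions by segment and variant. The helper names and every number are invented for the example.

```python
from collections import defaultdict


def rates_by_group(rows, key):
    """Conversion rate per group; rows are (segment, variant, converted) tuples."""
    totals = defaultdict(lambda: [0, 0])  # group -> [conversions, visitors]
    for row in rows:
        group = key(row)
        totals[group][0] += int(row[2])
        totals[group][1] += 1
    return {group: conv / n for group, (conv, n) in totals.items()}


def make_rows(segment, variant, conversions, visitors):
    """Build made-up visitor rows with the given number of conversions."""
    return [(segment, variant, i < conversions) for i in range(visitors)]


rows = (
    make_rows("mobile", "A", 2, 10) + make_rows("mobile", "B", 4, 10)
    + make_rows("desktop", "A", 4, 10) + make_rows("desktop", "B", 2, 10)
)

overall = rates_by_group(rows, key=lambda r: r[1])             # by variant only
segmented = rates_by_group(rows, key=lambda r: (r[0], r[1]))   # by segment and variant

print("overall:", overall)
print("segmented:", segmented)
```

Segments with few visitors produce noisy rates, so each segment needs enough data of its own before its result is treated as actionable.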

Once you pinpoint the reason, or reasons, behind the skewed data from your test, it’s important to consider the implications of your results. Skewed results can still add value: they highlight what needs to be fixed in your split test design so that future tests yield insights that improve your website’s conversion rate. Sometimes, skewed data simply means you’ve misinterpreted the test results and need to look deeper into what the data is revealing.

Transform Insights into E-commerce Optimizations

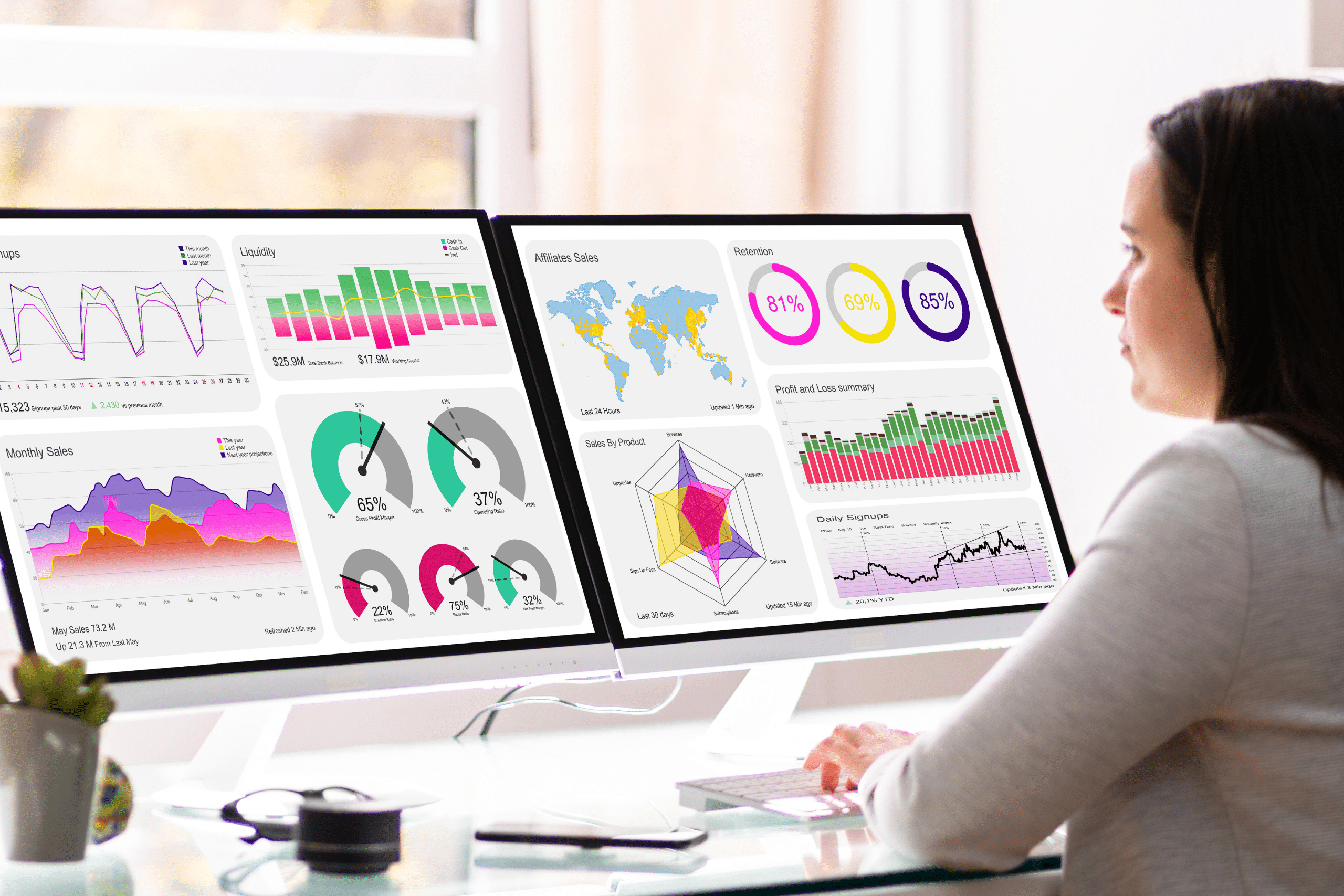

When done right, CRO in marketing is a highly collaborative process. At Omnitail, we help clients identify opportunities that benefit their customers and their bottom line. We believe in saves and don’t label negative test results as “failures” because every test we run provides insights that lead to strategically sound decisions.

With each test, we’ll walk you through our expert recommendations that advise you on what optimizations may work best and what changes to avoid. A testing program can be a burden to manage, which is why we’ll help you establish a website testing process and standardize execution methods to ensure consistency and efficiency. Free up your time to focus on what really matters when running your e-commerce business.

CRO is a long-term, strategic process that helps you improve your website’s performance and monetization over time. It focuses on the wins and the meanings you can derive from the data.

The Omnitail Difference

Omnitail is disrupting the digital marketing landscape with our unique profit-driven approach to e-commerce marketing. Annual growth requires your e-commerce business to continuously improve in three areas: traffic, monetization, and margins.

Lucky for you, we’re ready to get all three of these back on track for you. We increase traffic by sending the right traffic at the right time using our profit-driven performance marketing strategies. We improve monetization by continuously researching, designing, and testing to give you clear instructions on how to elevate your campaigns’ conversion rates. And we boost margins by accounting for the metrics that ad platforms usually miss: COGS, variable overhead, returns and cancellations, and more.